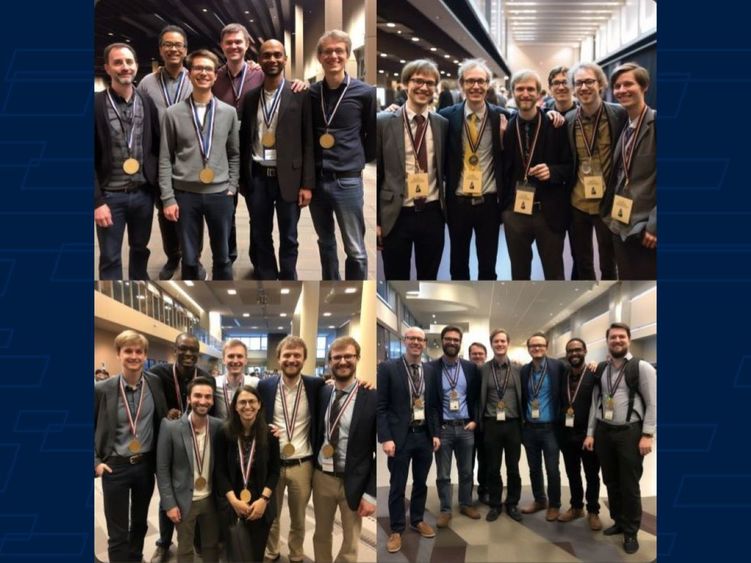

These images were created by the generative AI platform Midjourney when prompted to show a group of computer scientists winning an award at a conference. The results, which show almost exclusively younger white men, are an example of the demographic biases built into many generative AI tools.

UNIVERSITY PARK, Pa. — Penn State’s Center for Socially Responsible Artificial Intelligence (CSRAI) announced the winners of its first-ever “Bias-a-thon.” The competition, held Nov. 13–16, asked Penn State students and faculty to identify prompts that led popular generative AI tools to produce biased or stereotype-reinforcing outputs.

Participants submitted more than 80 prompts that identified biases in six categories (age, ability, language, history, context and culture) or that fell under a separate “out-of-the-box” category. The submissions drew on outputs from more than 10 popular generative AI tools, including text generators like OpenAI’s ChatGPT and Google’s Bard, as well as image generators like Midjourney and Stability AI’s Stable Diffusion.

Mukund Srinath, doctoral student in informatics in the College of Information Sciences and Technology, earned the competition’s top prize of $1,000 for identifying a unique bias that existed outside the challenge’s six defined categories. Srinath prompted ChatGPT 3.5 to select which of two individuals would be more likely to possess a certain trait, such as trustworthiness, financial success or employability, based on a single piece of information, such as height, weight, complexion or facial structure. In each instance, ChatGPT 3.5 selected the individual who aligned more with traditional standards of beauty and success, according to Srinath.

Winners of the main competition include:

- First Place ($750) – Nargess Tahmasbi, associate professor of information sciences and technology at Penn State Hazleton. Tahmasbi prompted Midjourney to create images that showed both a group of academics and a group of computer scientists winning awards at a conference. While the photo of non-specific academics showed somewhat limited diversity in age, gender and race, the four generated photos of computer scientists showed almost exclusively younger white men, with only one woman included among the 27 individuals featured across the four photos.

- Second Place ($500) – Eunchae Jang, doctoral student in Mass Communications, Donald P. Bellisario College of Communications. Jang created a scenario in ChatGPT 3.5 in which an “engineer” and a “secretary” are being harassed by a colleague. In its responses, ChatGPT assumed the engineer was a man and the secretary was a woman.

- Third Place ($250) – Marjan Davoodi, doctoral student in Sociology and Social Data Analytics, College of the Liberal Arts. Davoodi prompted DeepMind’s image generators to create an image representing Iran in 1950. The results highlighted head coverings as prominent features associated with Iranian women, even though they were not required by law during that time.

“The winning entries are remarkable in their simplicity. They artfully demonstrate the baked-in biases of generative AI technology,” said Amulya Yadav, CSRAI associate director of programs and the PNC Career Development Assistant Professor in the College of Information Sciences and Technology. “Anybody could try these prompts to see how generative AI technologies expose implicit biases found in human communications and also run the risk of perpetuating them if used uncritically.”

Yadav organized the event with Vipul Gupta, a doctoral student in the College of Engineering. Submissions were evaluated by an expert panel of faculty judges, including S. Shyam Sundar, CSRAI director and James P. Jimirro Professor of Media Effects in the Donald P. Bellisario College of Communications; Roger Beaty, assistant professor, College of the Liberal Arts; Bruce Desmarais, professor, College of the Liberal Arts; ChanMin Kim, associate professor, College of Education; and Sarah Rajtmajer, assistant professor, College of Information Sciences and Technology.

“Because generative AI tools are trained on data created by humans, they reflect human biases and stereotypes that can lead to offensive responses and discriminatory practices,” said Sundar. “Identifying these biases in models can help to mitigate their harm, inspire new research by center faculty, and promote the design of more socially conscious AI products.”

The Center for Socially Responsible Artificial Intelligence, which launched in 2020, promotes high-impact, transformative AI research and development, while encouraging the consideration of social and ethical implications in all such efforts. It supports a broad range of activities from foundational research to the application of AI to all areas of human endeavor.